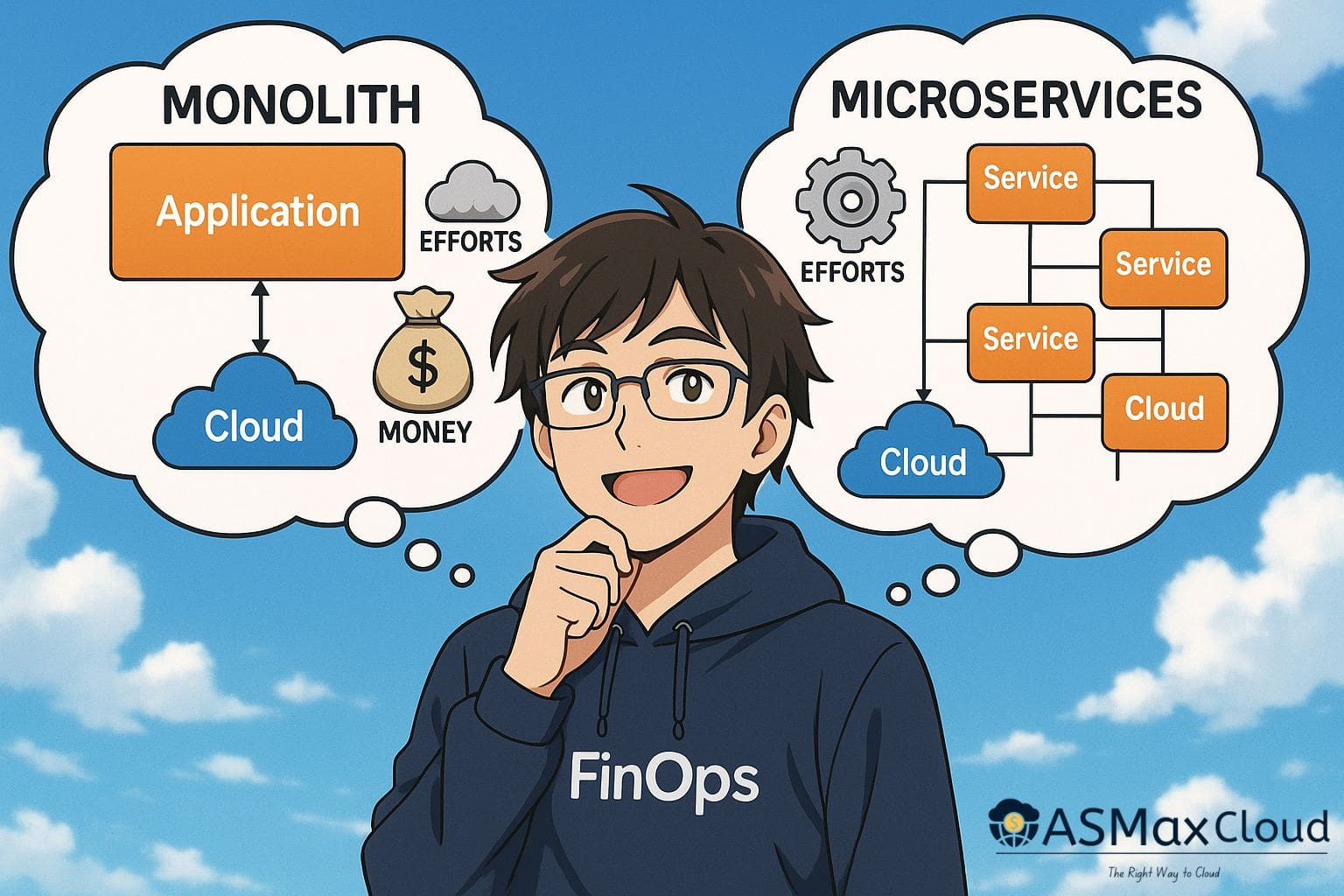

In cloud-native environments, it’s tempting to default to microservices as the architectural gold standard. Teams often adopt them early to improve developer velocity, increase modularity, and support independent deployments. But from a FinOps standpoint, this decision can quietly introduce a layer of technical and financial debt that scales faster than the systems themselves.

In this case study, I’ll break down how microservices—when introduced too early or without guardrails—can degrade both operational efficiency and cost visibility, and why a modular monolith might be the smarter choice at earlier stages of growth.

When Microservices Work Against You

At first glance, breaking an application into independent services sounds ideal. Teams get autonomy, deployments become more targeted, and change cycles are streamlined.

In practice, the operational reality is often quite different.

When each service is independently built, deployed, monitored, and maintained, the number of moving parts grows with every service you add. That growth carries a real cost, not just in engineering effort but in the cloud infrastructure and tooling needed to support it. Logging, tracing, CI/CD pipelines, IAM permissions, container orchestration, and testing environments must each be duplicated or scaled out for every service.

From a FinOps perspective, this means:

Fragmented cost centers: Each microservice consumes its own set of compute, storage, and network resources. Without proper tagging and alignment to business units, that spend becomes hard to attribute.

Poor cost visibility: Distributed systems mean distributed spending. It’s difficult to identify what’s actually driving cost unless there’s tight control over observability and ownership.

Orphaned resources: Services often get spun up faster than they’re retired. Idle compute, unutilized databases, and outdated pipelines sit silently accumulating cost.
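To make the attribution problem concrete, here is a minimal Python sketch that groups billing line items by an ownership tag, totals the spend no team can be charged for, and flags resources that look idle. The `LineItem` shape, the `owner` tag key, the 30-day idle threshold, and the sample figures are all hypothetical; a real version would read from your cloud provider's billing export and usage metrics.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class LineItem:
    """One billing line item (hypothetical shape, not a real provider export)."""
    resource_id: str
    cost: float                                  # spend for the billing period
    tags: dict = field(default_factory=dict)     # e.g. {"owner": "orders"}
    days_since_last_use: int = 0                 # assumed to come from usage metrics


def attribute_costs(items, tag_key="owner", idle_after_days=30):
    """Group spend by an ownership tag and flag likely orphaned resources."""
    by_owner = defaultdict(float)
    unallocated = 0.0
    idle = []
    for item in items:
        owner = item.tags.get(tag_key)
        if owner:
            by_owner[owner] += item.cost
        else:
            unallocated += item.cost  # spend that no team can be charged for
        if item.days_since_last_use >= idle_after_days:
            idle.append(item.resource_id)
    return dict(by_owner), unallocated, idle


# Illustrative sample data only.
items = [
    LineItem("vm-orders-1", 120.0, {"owner": "orders"}),
    LineItem("db-orders", 80.0, {"owner": "orders"}),
    LineItem("vm-search-1", 95.0, {"owner": "search"}),
    LineItem("db-legacy-report", 40.0, days_since_last_use=90),
]
by_owner, unallocated, idle = attribute_costs(items)
print(by_owner)     # {'orders': 200.0, 'search': 95.0}
print(unallocated)  # 40.0
print(idle)         # ['db-legacy-report']
```

Untagged spend and idle resources are reported separately on purpose: the first is a visibility problem, the second a cleanup problem, and they usually have different owners.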

The False Economy of Early Microservices

Teams sometimes break up a monolith not because they need to, but because they can. What that decision tends to overlook is the cost multiplier that follows.

Each service brings with it:

Its own deployment infrastructure

Its own monitoring and alerting

Its own security posture

Its own cloud footprint

Unless you’re operating at a scale where independent teams are blocked by shared deployments, these costs often outweigh the benefits. The system becomes harder to reason about, harder to debug, and, ironically, slower to evolve.

And when changes span multiple services, the effort multiplies. Instead of one code change and deployment, now it’s three. Or five. Each requiring review, integration, and coordination.
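A deliberately simple cost model shows why the multiplier matters. This Python sketch assumes the workload itself costs the same whether it runs as one deployable unit or many, and that each unit carries a fixed monthly overhead for the four items listed above. The overhead figures are made-up placeholders, not benchmarks.

```python
# Hypothetical fixed monthly overhead per deployable unit (placeholder numbers).
PER_SERVICE_OVERHEAD = {
    "deployment_infrastructure": 50.0,
    "monitoring_and_alerting": 40.0,
    "security_posture": 20.0,          # IAM policies, secrets, scanning
    "baseline_cloud_footprint": 90.0,  # e.g. minimum replicas kept running
}


def monthly_cost(workload_cost, n_services, overhead=PER_SERVICE_OVERHEAD):
    """Workload cost is assumed constant; fixed overhead is paid once per service."""
    return workload_cost + sum(overhead.values()) * n_services


def overhead_share(workload_cost, n_services):
    """Fraction of the total bill that is per-service overhead rather than workload."""
    total = monthly_cost(workload_cost, n_services)
    return (total - workload_cost) / total


for n in (1, 5, 12):
    print(n, monthly_cost(1000.0, n), round(overhead_share(1000.0, n), 2))
# 1 1200.0 0.17
# 5 2000.0 0.5
# 12 3400.0 0.71
```

Under these assumptions, fixed overhead grows linearly with the number of services while the useful workload stays flat. The model ignores the savings microservices can bring from scaling hot components independently, which is the trade-off worth weighing once you reach the scale where shared deployments actually block teams.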

The Modular Monolith Advantage

What often gets overlooked is how far a modular monolith can take you when paired with strong boundaries and good engineering practices. In this setup, you keep everything in a single codebase and deployment unit—but structure it internally into clear domains and ownership areas.
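Strong boundaries inside a monolith can be enforced in code rather than left to convention. The sketch below assumes a hypothetical layout where each domain lives under `app.<domain>` and exposes only `app.<domain>.api` to other domains. It uses Python's standard `ast` module to flag imports that reach into another domain's internals, or into a domain that is not on a hypothetical allowed-dependency list.

```python
import ast

# Hypothetical layout: each domain lives under app.<domain> and exposes only
# app.<domain>.api to other domains. Allowed cross-domain dependencies:
ALLOWED_DEPENDENCIES = {
    "orders": {"billing", "catalog"},
    "billing": set(),
    "catalog": set(),
}


def imported_names(tree):
    """Yield fully qualified names for absolute imports in a parsed module."""
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                yield alias.name
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            for alias in node.names:
                yield f"{node.module}.{alias.name}"


def boundary_violations(domain, source):
    """Return imports in `source` (owned by `domain`) that break module boundaries."""
    violations = []
    for name in imported_names(ast.parse(source)):
        parts = name.split(".")
        if len(parts) < 2 or parts[0] != "app" or parts[1] == domain:
            continue  # third-party import or an import within the same domain
        target = parts[1]
        if target not in ALLOWED_DEPENDENCIES.get(domain, set()):
            violations.append(f"{name}: '{domain}' may not depend on '{target}'")
        elif parts[2:3] != ["api"]:
            violations.append(f"{name}: use app.{target}.api, not its internals")
    return violations


SAMPLE = """
from app.billing.api import charge
from app.billing.internal.ledger import post_entry
import app.search.api
from . import models
"""
for problem in boundary_violations("orders", SAMPLE):
    print(problem)
```

Run as a CI check, a rule like this keeps domains decoupled enough that one could later be extracted into its own service if the organization reaches that point, without paying the per-service overhead before then.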

From a FinOps lens, this model has major advantages:

Unified cost tracking: One application means fewer moving parts and easier attribution.

Centralized optimization: Shared compute, shared databases, and shared observability tools are more efficient and easier to manage.

Faster iteration: Teams can ship cross-domain changes as a single code change and deployment, with fewer integration blockers.

Scaling Architecture and FinOps Together

The goal isn’t to avoid microservices entirely—it’s to introduce them when the organization is ready. That readiness isn’t just about developer headcount; it’s about having:

A clear ownership model for each service

Solid observability and cost monitoring

Dedicated FinOps practices to ensure spend is visible, intentional, and mapped to business value

Only then do microservices deliver on their promise of scalability—without becoming a silent cost sink.

Bringing Operational Costs Into Cloud Cost Efficiency KPIs

This case study isn’t just about architecture—it’s a prompt to evolve how we think about cost efficiency in the cloud. Architectural decisions like choosing microservices over a modular monolith don’t just impact scalability or developer experience—they fundamentally alter the operational cost profile of your platform.

Yet in many organizations, these operational costs remain invisible in standard FinOps dashboards.

To manage cloud costs effectively, we need to go beyond instance hours and storage volume. We need to ask:

What is the cost of maintaining and supporting this architectural pattern?

How much overhead are we introducing per deployment, per service, per team?

Are we creating cost fragmentation that makes unit economics harder to calculate?

By linking architectural choices to Cloud Cost Efficiency KPIs, such as:

Cost per deployment

Cost per service

Cost per business function

Cost of engineering effort to support infrastructure

we move from reactive cost optimization to proactive cost governance.
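These KPIs need agreed definitions before they can be tracked. The Python sketch below shows one possible set, assuming each service's monthly cloud cost (attributed via tags), CI/CD pipeline cost, deployment count, business function, and infrastructure support hours are available. The `ServiceMonth` fields, the "fully loaded" cost formula, the choice to define cost per deployment as pipeline spend divided by deployments, the hourly rate, and the sample figures are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ServiceMonth:
    """One month of hypothetical cost data for a single deployable unit."""
    cloud_cost: float      # compute, storage, network attributed via tags
    pipeline_cost: float   # CI/CD runners, artifact storage, etc.
    deployments: int
    business_function: str
    support_hours: float   # engineering time spent keeping its infra running


def cloud_efficiency_kpis(services, hourly_rate):
    """One possible definition of each KPI; adjust to your own cost model."""
    per_service = {}
    per_function = defaultdict(float)
    for name, s in services.items():
        # Fully loaded cost: cloud spend + pipeline spend + engineering time.
        loaded = s.cloud_cost + s.pipeline_cost + s.support_hours * hourly_rate
        per_service[name] = loaded
        per_function[s.business_function] += loaded
    total_pipeline = sum(s.pipeline_cost for s in services.values())
    total_deploys = sum(s.deployments for s in services.values())
    support_hours = sum(s.support_hours for s in services.values())
    return {
        "cost_per_service": per_service,
        "cost_per_deployment": total_pipeline / total_deploys if total_deploys else 0.0,
        "cost_per_business_function": dict(per_function),
        "engineering_support_cost": support_hours * hourly_rate,
    }


# Illustrative sample data only.
services = {
    "orders": ServiceMonth(900.0, 60.0, 20, "checkout", 10.0),
    "billing": ServiceMonth(400.0, 60.0, 10, "checkout", 6.0),
    "search": ServiceMonth(700.0, 60.0, 10, "discovery", 4.0),
}
kpis = cloud_efficiency_kpis(services, hourly_rate=100.0)
print(kpis["cost_per_deployment"])         # 4.5
print(kpis["cost_per_business_function"])  # {'checkout': 3020.0, 'discovery': 1160.0}
```

Because the function also accepts a single entry, the same definitions can score a modular monolith and a microservice layout side by side, which is where the architectural trade-off shows up in the numbers.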

This mindset shift is where FinOps becomes truly strategic. It’s not just about rightsizing compute or shutting down idle resources. It’s about designing systems—both technical and operational—that make cost visible, measurable, and aligned with business outcomes.